GLM-4.7-Flash is a 30B-A3B MoE model trained by Z.ai. It is a small and efficient version of the GLM-4.7 models.

GLM-4.7

43.6K Downloads

Open-source coding models by Z.ai, built on a new base model and specializing in coding and tool calling.

Models

Updated 6 days ago

16.00 GB

Memory Requirements

To run the smallest GLM-4.7 model, you need at least 16 GB of RAM.
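
As a rough illustration of where a figure of this size can come from, the sketch below estimates weight storage from the parameter count and the bits stored per weight. The 4-bit value is an assumption, since this page does not state the quantization used, and the estimate ignores the KV cache and runtime overhead. Note that in an MoE model only about 3B parameters are active per token, but the full 30B are typically kept in memory.

```python
def estimate_weight_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes).

    params_billion * 1e9 weights, each taking bits_per_weight / 8 bytes,
    divided by 1e9 bytes per GB. The 1e9 factors cancel out.
    """
    return params_billion * bits_per_weight / 8


# Assumption: roughly 4 bits per weight (not stated on this page).
weights_gb = estimate_weight_gb(params_billion=30, bits_per_weight=4)
print(f"Estimated weights: {weights_gb:.1f} GB")
```

Under that assumption the weights alone come to about 15 GB, which is in the same range as the 16 GB requirement once overhead is added.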

Capabilities

GLM-4.7 models support tool use and reasoning. They are available in the GGUF and MLX formats.
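
To make tool use concrete, here is a minimal sketch of a chat request body that offers the model one tool, written in the OpenAI-style `tools` format. The `get_weather` tool is hypothetical, and the model identifier is assumed to match the download name shown on this page; neither is confirmed here.

```python
import json


def build_tool_call_payload(model_id: str, question: str) -> dict:
    """Build a chat request body that advertises a single callable tool."""
    weather_tool = {
        "type": "function",
        "function": {
            "name": "get_weather",  # hypothetical tool, for illustration only
            "description": "Return the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
    return {
        "model": model_id,
        "messages": [{"role": "user", "content": question}],
        "tools": [weather_tool],
    }


# Assumption: the API identifier is the same as the download name.
payload = build_tool_call_payload(
    "zai-org/glm-4.7-flash", "What is the weather in Paris?"
)
print(json.dumps(payload, indent=2))
```

A model that decides to use the tool would be expected to answer with a tool call naming `get_weather` and its arguments, rather than plain text; the calling program then runs the tool and sends the result back in a follow-up message.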

About GLM-4.7

GLM-4.7-Flash offers a new option for lightweight deployment that balances performance and efficiency.

Run in LM Studio

Head over to model search within the app (Cmd/Ctrl + Shift + M) and search for GLM 4.7.

Or, from the terminal, run:

lms get zai-org/glm-4.7-flash
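
Once the model is downloaded and loaded, it can be queried from code through LM Studio's local OpenAI-compatible server. The sketch below uses only the Python standard library. It assumes the server is running at the default address `http://localhost:1234` and that the model's API identifier matches the download name; both are assumptions to adjust for your setup.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # assumption: default local server address
MODEL_ID = "zai-org/glm-4.7-flash"     # assumption: identifier matches the download name


def build_chat_request(prompt: str) -> urllib.request.Request:
    """Prepare a POST request for the chat completions endpoint."""
    body = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


# Example call (requires the local server to be running):
# print(ask("Write a Python one-liner that reverses a string."))
```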

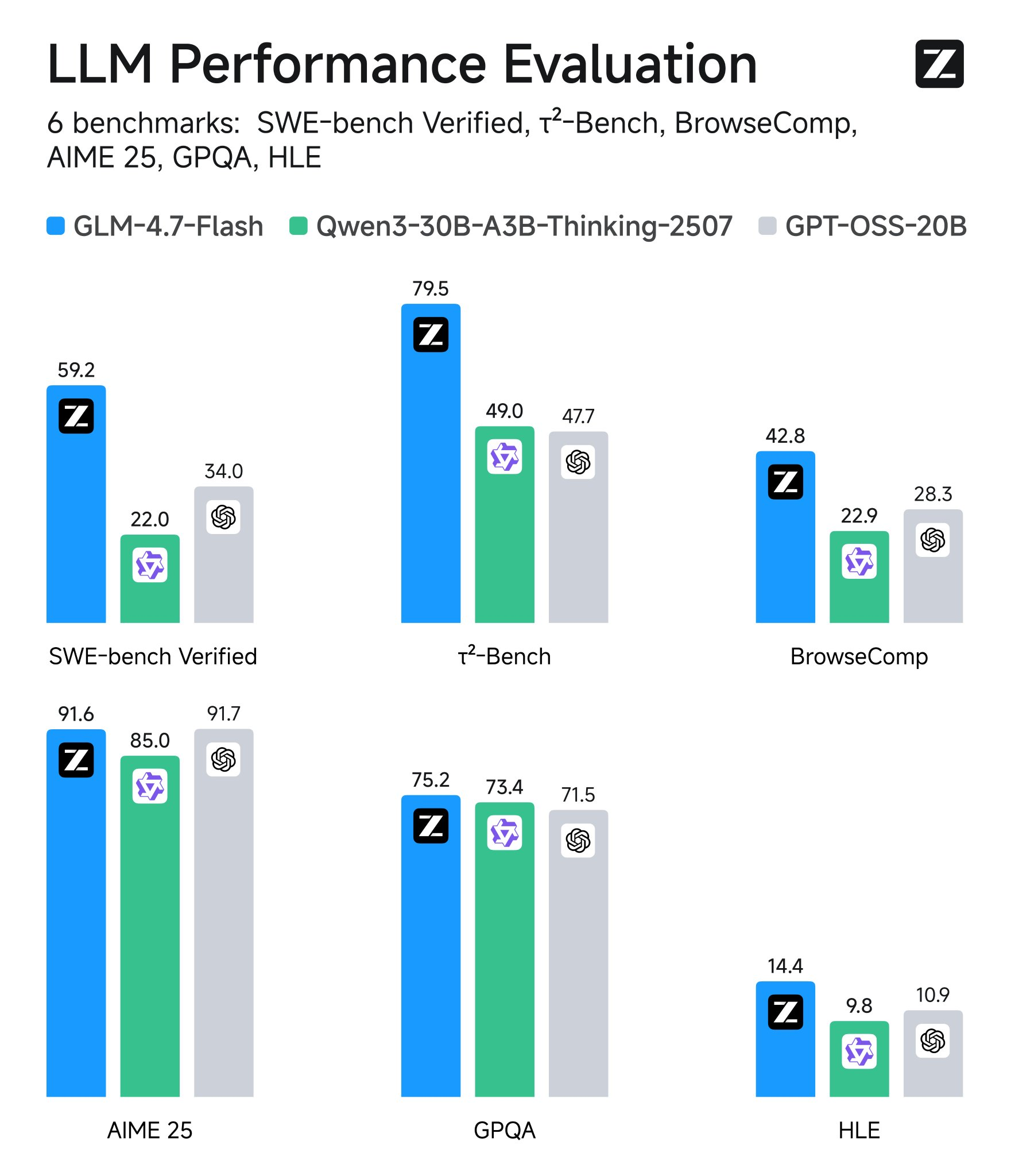

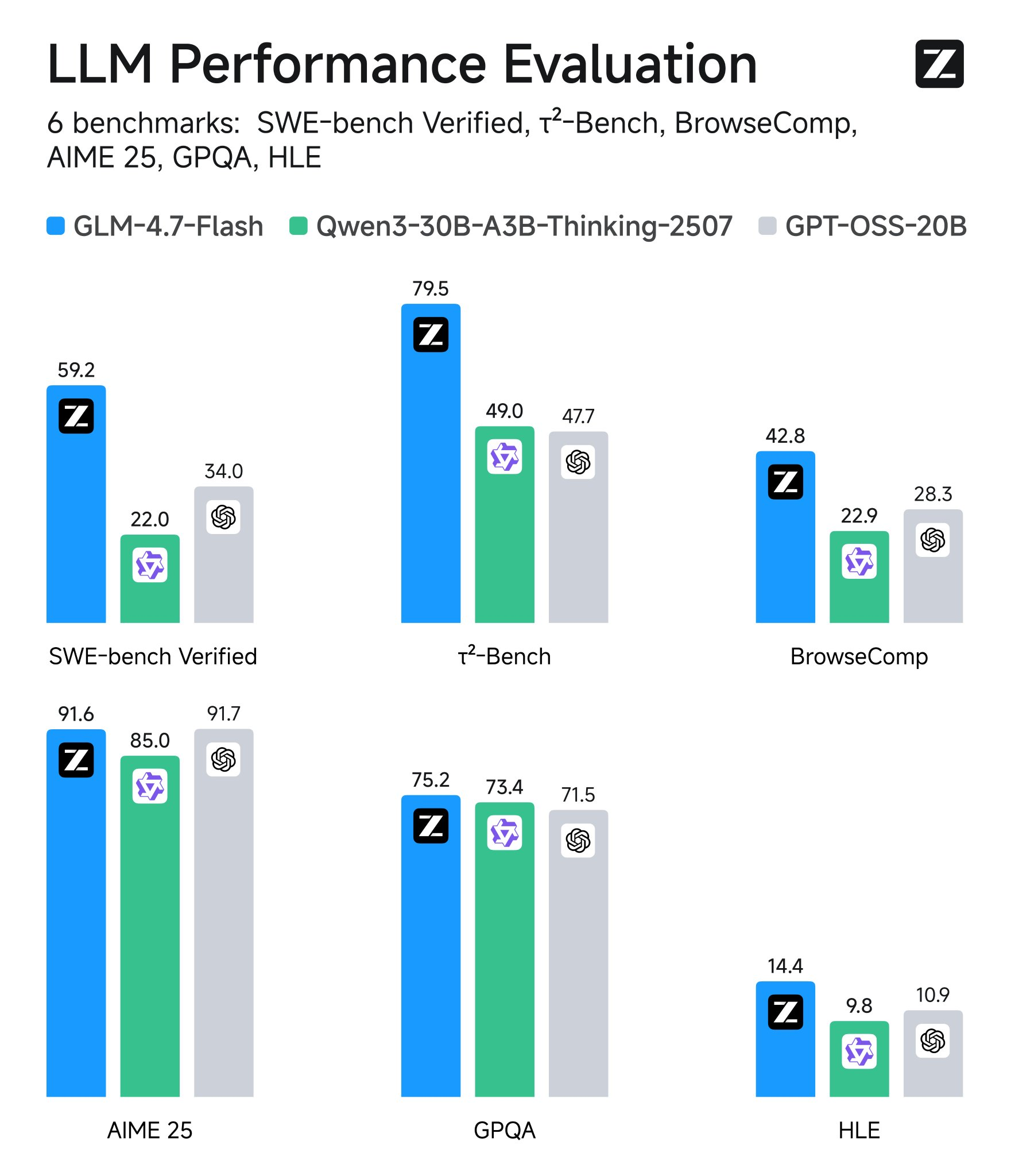

Performance

License

GLM-4.7 models are provided under the MIT license.